For the past 10 years, I have been honored to participate as one of the voters for UltraRunning Magazine’s year-end (North American) Ultrarunner of the Year balloting. Recently, several people have asked me whether I use any particular method in filling out my ballot. While the magazine intentionally provides no template for completing the ballots, over the years I have formulated my own method that seems to work pretty well for me.


The way the process works is that in mid-December Tropical John Medinger (former UltraRunning Magazine publisher and all-around ultra guru) sends each voter a detailed spreadsheet of every notable North American ultramarathon performance of the year. Typically, a small group of us receives an advance copy of the spreadsheet to review and make sure nothing is omitted or inaccurate, and then the document (typically about 50 pages long) is distributed to the full panel. Each panelist then has about 10 days to complete the ballot and return it to John. Having spoken to several members of the group over the years, I have come to realize that there is no single accepted process for balloting. What I am confident of is that each voter takes the responsibility very seriously and typically spends 20 to 30 hours on the process.

My approach is part science and part art, and I have refined it over the years. I begin with a thorough reading of the entire spreadsheet, using different-color highlighter pens (yes, I print the spreadsheet out and work on actual paper) to mark such things as North American and world records, course records, significant results in large or prestigious events, and any other results that catch my eye.

Following that general review of the spreadsheet, I begin looking at each individual runner’s full body of work for the year. In that process I consider how many events the athlete completed, how many DNFs (if any) they had, how competitive the chosen events were, and how the runner did overall relative to the field in those events. Once that review is complete, I create a list of approximately 15 to 20 runners of each gender who I think could plausibly land in my top 10. These lists are not ordered at all; rather, they are just a brain dump based on the spreadsheet data.

With the initial lists created, I step away from the process for two to three days to let the basic data sink in. After that break, I return to each list and set about ordering the runners into a ranking. In creating this initial ranking, I give each race result a score on a 1-to-5 continuum. A score of 1 might be a first-place finish at an off-season fatass run, while a score of 5 might be a top-three finish in a competitive race like The North Face Endurance Challenge 50 Mile Championships or UTMB. For DNFs I assign a score of zero. This scoring system is, of course, somewhat subjective, but now that I have 10 years’ worth of my own balloting data, I can compare scores for results from other years and assign them consistently. After adding up each runner’s points and dividing the total by the number of events they entered, I rank the full list by that average.
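For readers who like to see the arithmetic spelled out, here is a minimal sketch of that averaging step in Python. The runner names and scores are invented for illustration only, and the sketch assumes nothing beyond what is described above: each result gets a 1-to-5 score, a DNF counts as 0, and the total is divided by the number of events entered.

```python
# Hypothetical per-race scores on the 1-to-5 scale; a DNF is recorded as 0.
# Runner names and scores are invented purely for illustration.
season_scores = {
    "Runner A": [5, 4, 0],  # two strong results and one DNF
    "Runner B": [3, 3, 4],
    "Runner C": [5, 5],
}


def average_score(scores):
    """Total points divided by the number of events entered (DNFs included)."""
    return sum(scores) / len(scores)


def rank_runners(scores_by_runner):
    """Return runner names ordered from highest to lowest average score."""
    return sorted(
        scores_by_runner,
        key=lambda name: average_score(scores_by_runner[name]),
        reverse=True,
    )


for place, name in enumerate(rank_runners(season_scores), start=1):
    print(place, name, round(average_score(season_scores[name]), 2))
```

In this made-up example, Runner A’s DNF pulls a 5 and a 4 down to an average of 3.0, below Runner B’s steadier 3.33, which shows how heavily a zero weighs when scores are averaged rather than summed.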

With that ordered ranking complete, I then compare head-to-head performances. Obviously, not every athlete has competed against every other athlete in the ranking, but where head-to-head comparisons exist, I take them into account and adjust the rankings accordingly. Typically, head-to-head comparisons move runners one or two spots in either direction.

Some observers over the years have asked whether I have any inherent biases in my process, such as weighting trail runs more heavily than road or track runs, or 100-mile races more heavily than shorter races. In truth, I don’t have any explicit biases. However, in ranking certain events, I may use some subjective judgement that could be seen as a bias, and I suspect that other voters do so as well. That subjective judgement, or ‘gut test,’ is typically the last stage in my ranking, and in those cases I may move an athlete to a different place solely on gut feeling. These occasions are very rare and typically involve moving a runner only one place up or down in the rankings.

With less than a week to go until we receive our ballots from John, I know many of us are beginning to think about our votes. From my perspective, these rankings seem to get more challenging every year, but the process is also a wonderful year-end ritual that provides a great opportunity for reflection on the sport that means so much to me.

Bottoms up!

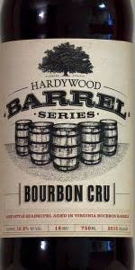

AJW’s Beer of the Week

This week’s Beer of the Week comes from Hardywood Park Craft Brewery in Richmond, Virginia. Hardywood’s Bourbon Barrel Cru is a classic Belgian-style Quadrupel ale with a delicious smoky and nutty flavor and a smooth texture that is simply scrumptious on a frosty winter’s night.


Call for Comments (from Meghan)

- If you were to be a voter on this panel, what methods do you think you might use to create a women’s and men’s top-10 list?

- And if you are a voting member on the panel, might you share how you go about casting your votes for (North American) Ultrarunner of the Year?